QUT researchers working on how robots can be taught to grasp objects in real-world situations have received a US$70,000 research grant from ecommerce giant Amazon.

The Amazon grant coincides with the publication of a separate ground-breaking study involving QUT researchers on robot grasping.

QUT robotics researcher Distinguished Professor Peter Corke, founding director of the Australian Centre for Robotic Vision headquartered at QUT, and Research Fellow Dr Jürgen ‘Juxi’ Leitner received the Amazon award in recognition of their world-leading research into vision-guided robotic grasping and manipulation.

Professor Corke and Dr Leitner led a Centre team to victory, winning the US$80,000 first prize at the 2017 Amazon Robotics Challenge in Japan.

“Real-world manipulation remains one of the greatest challenges in robotics,” Professor Corke said.

“So, it’s exciting and encouraging that Amazon is throwing its support behind our work in this field.”

Dr Leitner, who heads up the Centre’s Manipulation and Vision program, described the Grasping with Intent project, which has been recognised with the Amazon grant, as ambitious and unique.

“While recent breakthroughs in deep learning have increased robotic grasping and manipulation capabilities, the progress has been limited to mainly picking up an object,” Dr Leitner said.

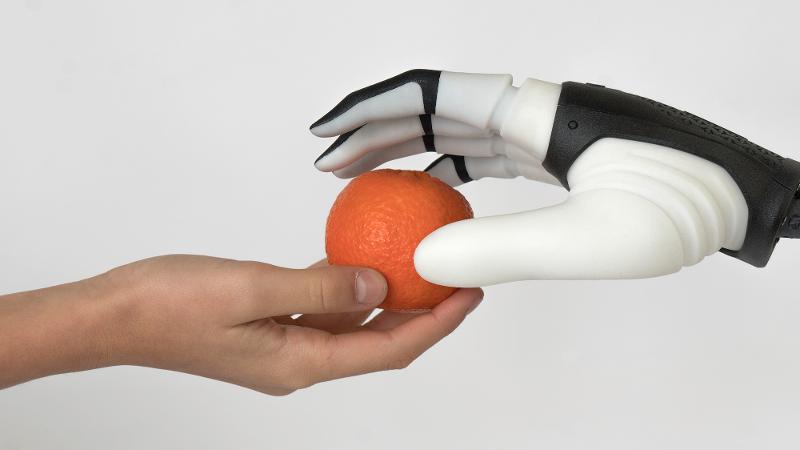

“Our focus moves from grasping into the realm of meaningful vision-guided manipulation. In other words, we want a robot to be able to seamlessly grasp an object ‘with intent’ so that it can usefully perform a task in the real world.

“Imagine a robot that can pick up a cup of tea or coffee, then pass it to you!”

The ground-breaking study, published today in Science Robotics by a research team from The BioRobotics Institute of Scuola Superiore Sant’Anna and the Australian Centre for Robotic Vision, reveals the guiding principles that regulate the choice of grasp type during a human-robot exchange of objects.

The study analysed the behaviour of people when they have to grasp an object and when they need to hand it over to a partner.

The researchers investigated the grasp choice and hand placement on those objects during a handover when subsequent tasks are performed by the receiver.

In the study, people were asked to grasp a range of objects and then pass them to another person. The researchers looked at how people picked up the objects, which included a pen, a screwdriver, a bottle and a toy monkey, how they passed them to another person, and how that person then grasped them.

Passers tend to grasp the purposive part of the objects and leave “handles” unobstructed to the receivers. Intuitively, this choice allows receivers to comfortably perform subsequent tasks with the objects.

The findings of the research will help in the future design of robots that have the task of grasping objects and passing them.

Dr Valerio Ortenzi, Research Fellow at the Australian Centre for Robotic Vision and one of the principal authors of the paper, said grasping and manipulation were regarded as very intuitive and straightforward actions for humans.

“However, they simply are not,” Dr Ortenzi said. “We intended to shed a light on the behaviour of humans while interacting in a common manipulation task and a handover is a perfect example where little adjustments are performed to best achieve the shared goal to safely pass an object from one person to the other.”

Professor Corke said real-world manipulation remained one of the greatest challenges in robotics.

“We strive to be the world leader in the research field of visually-guided robotic manipulation,” Professor Corke said.

“While most people don’t think about picking up and moving objects – something human brains have learned over time through repetition and routine – for robots, grasping and manipulation is subtle and elusive.”

Main image credit: © Elastico Disegno - www.elasticofarm.com

Media contact:

Rod Chester, QUT Media, 07 3138 9449, rod.chester@qut.edu.au

After hours: Rose Trapnell, 0407 585 901, media@qut.edu.au