Tim Wilkes, 9 September, 2022

Peculiarities, possibilities, and alchemy

It seems that people have been talking about Artificial Intelligence (A.I) as either the saviour or the destroyer of humankind for ages.

Think of Will Robinson’s robot in “Lost in Space”, HAL, the enigmatic A.I in “2001: A Space Odyssey”, or even the creation of Cyberdyne Systems in “Terminator.”

Positive A.I. role models from the realms of popular fiction are somewhat harder to come by. Off the top of my head, I can come up with Jarvis, Tony Stark’s A.I assistant from “Avengers” and that’s about it.

Outside the realms of fiction, the narrative seems to swing between doom (“The robots are gonna take our jobs”) and a euphoric proclamation that A.I will miraculously solve all of our woes.

As for me, I sit somewhere in the middle.

I think that A.I. can, and indeed does, help us in many truly amazing ways, but also that applications of A.I frequently have very peculiar results.

I think that there is a “secret formula” which, if executed well, has brilliant results.

But all too often one (or more) of the elements is a bit wobbly and that can have some quite odd consequences.

Here’s my formula for making magic with A.I.

Data + Human + A.I = Alchemy

The obstacle to perfect execution is that each one of these elements has some intrinsic flaws.

Let me show you what I mean.

THE PROBLEM WITH DATA: The word “data” no longer means “useful information”; instead, it has come to refer to that super abundant yet unrepresentative morass of all information which happens to be numerically expressible. Spreadsheets everywhere are full of it.

Many organisations seem to worship it with almost religious fervor and treat it as the oracle to future success and prosperity.

In fact, you can’t help noticing that many organisations seem to be incapable of acting unless the action can be unequivocally justified numerically on a spreadsheet.

It seems to me that a business which is incapable of action except based on numerical information can look quite clever but is at permanent risk of acting very dumb.

It’s pretty obvious that numerically expressible data can’t tell us everything. How do you measure “hope”, “regret” or “doubt”, for example?

Even if you do have a bucket load of data that can be expressed numerically, the interpretation of it is far from obvious or certain. To see exactly what I mean, check out Simpson’s Paradox, where pooled data can appear to show that the less a student studies, the better they score on tests, even though the opposite holds within each group of students.
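To make the Simpson’s Paradox point concrete, here is a minimal sketch in Python. The numbers are invented purely for illustration, and the two groups of students are my own assumption rather than real data. Within each group, more study goes with a higher score, yet the pooled data slopes the other way.

```python
def slope(points):
    """Least-squares slope of score against hours for (hours, score) pairs."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in points)
    var = sum((x - mean_x) ** 2 for x, _ in points)
    return cov / var

# Hypothetical (hours studied, test score) pairs, invented for illustration.
group_a = [(1, 80), (2, 85), (3, 90)]  # students who find the subject easy
group_b = [(6, 50), (7, 55), (8, 60)]  # students who find the subject hard

slope_a = slope(group_a)               # trend inside group A
slope_b = slope(group_b)               # trend inside group B
slope_all = slope(group_a + group_b)   # trend when the groups are pooled

print(f"Group A: {slope_a:+.2f} points per extra hour")
print(f"Group B: {slope_b:+.2f} points per extra hour")
print(f"Pooled:  {slope_all:+.2f} points per extra hour")
```

Both groups gain marks for every extra hour, but anyone looking only at the pooled spreadsheet would conclude that studying hurts. The hidden variable here (how hard each student finds the subject) is exactly the kind of thing the numbers alone don’t tell you.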

I’m sure that many people have looked at what the “data” was telling them and thought “that doesn’t feel right” but lacked the authority, courage or maybe the language to challenge it.

As Jeff Bezos once commented, “When the anecdotes and the data disagree, the anecdotes are usually right.”

Then there is of course “BIG DATA.”

I say BEWARE OF BIG DATA: it all comes from the same place. The Past!

You may have heard the term “Management by the rear-view mirror”. This is the comfortable idea that analysing what has already happened will provide a clear, safe, and lucrative path into the future.

If that were true, there would be a great many things that wouldn’t exist today simply because there was no justification in historical data.

Take the Dyson vacuum cleaner, for example.

Would historical data have predicted a vibrant market for a $1,500 vacuum cleaner (or the Dyson hair dryer for that matter)?

Past data would have shown that a vacuum cleaner is a “distress purchase”: you only buy one when the one you have breaks. The top end of the market at the time of release was probably the Miele at half the price.

The data would simply have said “No!”

Yet here we are. Almost everyone I know has a Dyson vacuum. James Dyson is a billionaire, and my wife wants a new hairdryer.

If the real world has taught us anything, it is that historical data is flawed, incomplete and often a poor guide to the future.

What about the second part of the formula?

THE PROBLEM WITH HUMANS: There is a huge, often invisible or ignored human element in the universe of A.I. Clearly the creativity, insights and intuitions of the “human” are essential to make A.I useful.

But beware! This brings our very human assumptions, individual world views and biases into play. Often in quite peculiar ways.

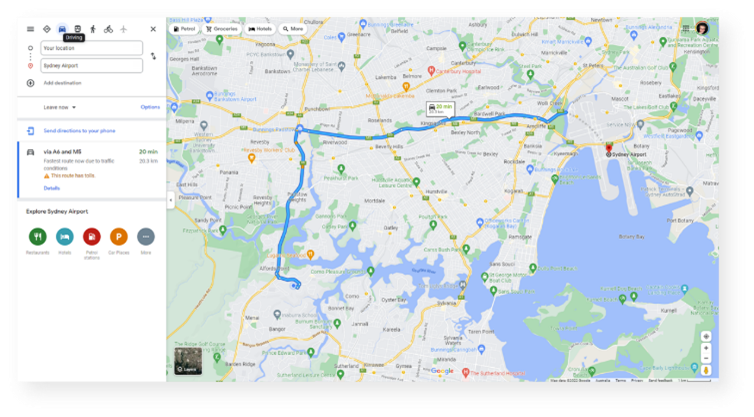

We’ve all used Google Maps to plan a route. Perhaps from home to the airport.

Here’s my friend Google showing me how to drive to the airport from my home in Sydney.

Mmmm, I think to myself. Maybe I’ll take the train instead…

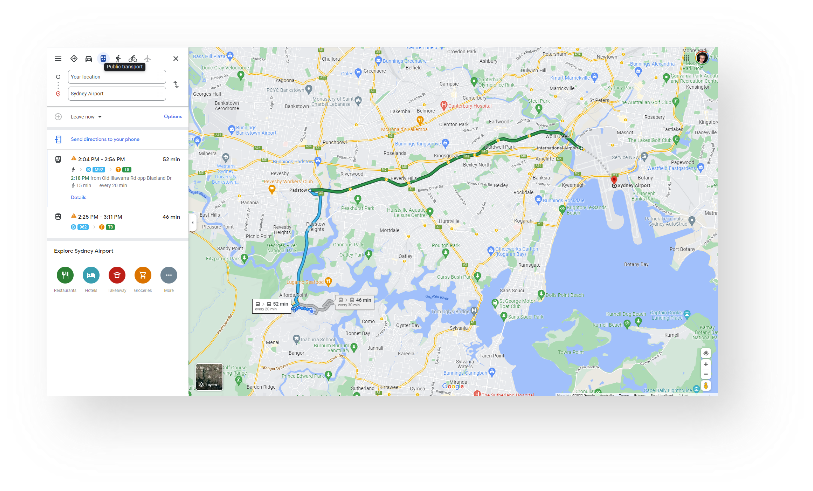

Here’s Google showing me how to catch public transport to the airport from my home in Sydney.

But wait. That’s not right, is it?

The choice for pretty much everyone in the world is not car OR public transport.

The travel method is car AND public transport. We will drive to the closest station, park, and then catch the train to our destination.

How come The Google assumes that we will either drive or take public transport but never a combination of both?

Why this is the case would likely be a complete mystery if you didn’t know that the writers of the algorithm were mostly from Southern California, where the only reason that you’d take public transport is if you don’t own a car.

In Southern California using both is unfathomable and thus Google Maps ignores the very possibility!

The technology behind Google Maps and the A.I is simply brilliant but remains as biased and flawed as the “human ex machina”.

So, if the flaws of data and the peculiarities of humans come together to make an A.I, what might happen?

In the words of Jeremy Clarkson, “How hard can it be?”

THE PROBLEM WITH A.I: I make no bones about A.I. being borderline magical. If there was ever a proof needed for Arthur C. Clarke’s famous quote, “Any sufficiently advanced technology is indistinguishable from magic”, then contemporary A.I is it.

But I do think there are a few hidden problems (no I’m not going to delve into ethics and morality here).

The main problem, I believe, is that A.I is often making choices for us without us realizing that it’s happening.

The power of “defaults” is well known to behavioural science. Our human brains are intrinsically lazy and are prone to accept the default or easy choice offered to us.

This can lead us to make decisions and choices without understanding why. Often without giving the choice a single moment’s thought.

This can lead to some surprising and quite bizarre outcomes.

Bizarre A.I. Outcome #1

Let’s stay with the GPS navigation theme for starters.

When you are planning any trip, the default journey optimisation is quickest (shortest duration). You can sometimes choose to “avoid tolls” but that’s about it.

For me (I live in Sydney), if I’m driving to the airport, my GPS will usher me down the M5 freeway. Most of the time this will indeed be the “fastest.”

But every once in a while the M5 will be a disaster. A breakdown or accident will turn the freeway into a car park. With no chance of escape, a 30-minute journey can turn into hours, resulting in a missed flight.

Strangely, if an experienced taxi driver takes me to the airport, they will rarely take the freeway.

Why is this? Because whilst the freeway is usually the fastest it also has the highest potential variability. The non-freeway route might be longer on average but will never be so long that I’d risk missing my flight.

Sometimes “lowest variability” trumps the A.I. default setting of “shortest duration.”
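Here is a rough sketch of the taxi driver’s logic in Python. The travel times and the 60-minute deadline are numbers I have made up for illustration, not real M5 data, and the route names are just labels. It compares the two routes on average duration (the usual default) and on the chance of blowing the deadline.

```python
from statistics import mean, pstdev

# Hypothetical door-to-airport times in minutes, invented for illustration.
freeway = [30, 28, 32, 29, 31, 30, 27, 150, 30, 33]        # one bad day in ten
back_streets = [42, 45, 40, 44, 43, 41, 46, 44, 42, 43]    # slower but steady

def summarise(times, deadline):
    """Return average, spread, worst case and share of trips past the deadline."""
    return {
        "mean": mean(times),
        "spread": pstdev(times),
        "worst": max(times),
        "missed": sum(t > deadline for t in times) / len(times),
    }

deadline = 60  # assumed: minutes available before the flight is missed
stats = {
    "freeway": summarise(freeway, deadline),
    "back streets": summarise(back_streets, deadline),
}

# The usual default: lowest average duration.
fastest_on_average = min(stats, key=lambda name: stats[name]["mean"])
# The taxi driver's rule: lowest chance of missing the flight.
safest = min(stats, key=lambda name: stats[name]["missed"])

print("Fastest on average:", fastest_on_average)
print("Least likely to miss the flight:", safest)
```

With these made-up numbers the freeway wins on average, but the back streets never miss the deadline. Which answer is “best” depends entirely on what you chose to optimise for, and the default made that choice for you.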

I might also suggest that “most scenic”, “best driving road” or even “avoid speed bumps” would be preferable to the usual (unquestioned) default in many circumstances.

Bizarre A.I. Outcome #2

This one’s a little more controversial. An example of distorted choices.

Dating apps!

Specifically, how the algorithm influences clients’ choices on who to date.

One of the top criteria presented to women searching for a man is height.

A common specification for this parameter is “Over 6ft.”

Is this really how you want to make your choice?

Or is it just an easy characteristic to present and is thus part of the choice-architecture you are presented with?

(By the way, you just filtered out Brad Pitt: 5'10", George Clooney: 5'10", Tom Hardy: 5'9", Harry Styles: 5'10")

My advice is to always be wary of who (or what) is guiding your selections. Is that the stuff that’s truly important to you?

Okay, so those are some of the things to watch out for.

NOW FOR SOME ALCHEMY: Remember when I said that IF all the elements are brought together in perfect symbiosis, then we can get some truly magical outcomes?

Data + Human + A.I = Alchemy

Here are some examples of A.I alchemy at work in our everyday lives.

A.I. ALCHEMY: Making things easier

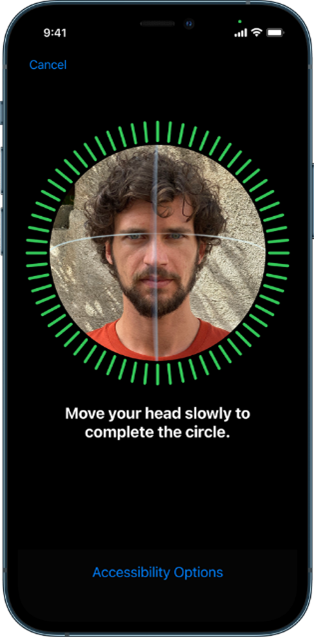

Simple, magical things that we take for granted like facial recognition making both securing and unlocking your phone a snap! (This is especially useful if you work for a security conscious organisation that insists on you having a mile-long passcode!)

The magic of digital assistants doing everything from checking the weather, setting reminders, turning on lights and timing my morning boiled eggs.

And where would we all be without spell-check and autocorrect watching over our shoulders? I still remember the days of “whiteout” correction fluid before I could just type and get the A.I to fix it all later.

A.I. ALCHEMY: Making life safer

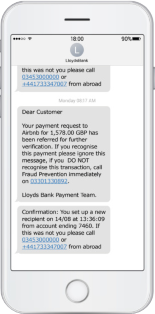

“Hello, did you just make a purchase in Venezuela?”

Online fraud detection monitoring billions of transactions looking for anomalies that could mean your account is about to be emptied by some faceless bandit.

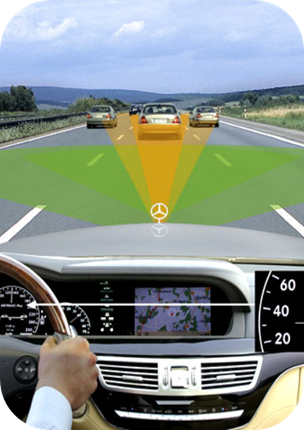

Vehicle A.I that provides collision avoidance. Always alert for that moment when something unexpected happens in the split-second of distraction.

A.I. ALCHEMY: helping businesses to run better

About now you are probably wondering what SAP, the world’s largest provider of business systems and applications, is doing in the world of A.I.

Well, about a decade ago we created the world’s first business A.I.

A powerful A.I focussed 100% on helping business.

We were so excited that we mostly forgot to tell anyone!

Today there are around a thousand A.I powered processes sat waiting to be called upon.

Things like account intelligence helping organisations to care better for their customers by monitoring interaction, understanding history and identifying trends that allow us to anticipate and respond to customer needs with amazing service.

Far more boring things like learned assignments & reconciliation, using A.I to provide frictionless and completely automated clearing (puzzlingly, accountants get excited by this. Who knew?).

But also, potentially life-changing things like Digital Aged Care. Using wearable devices, motion detection and voice assistants to create an “always aware” A.I that monitors the activity and wellbeing of vulnerable people in their homes and triggers response activities if needed.

And perhaps even planet-changing things, using advanced A.I to optimize for carbon reduction across entire business ecosystems (e.g. Catena-X). Imagine a globally visible, constantly up-to-date register of the carbon footprint of the world’s trade… and the application of A.I to minimize (even eliminate) waste and carbon!

And so, I rest my case for the potential of A.I to create genuine alchemy in the world.

Of course, it’s not without its faults, foibles, and peculiarities, but if the elements can be brought together in perfect symbiosis, then we can make all our lives easier and safer, help the world to run better, and maybe solve some of the world's most wicked problems.