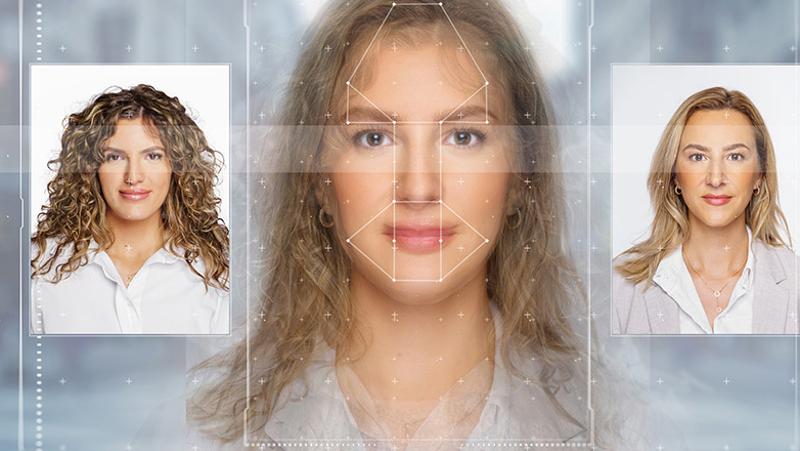

Organisations are at high risk of reputational damage, extortion and IP theft from synthetic media. AI-generated content, such as deepfakes, is so realistic that humans can’t tell it apart from authentic media up to 50 per cent of the time.

- Extortion, blackmail, IP theft just some of the scams enabled by synthetic media

- Researchers have created a playbook for organisations to deal with the looming risk

- Deepfakes created by artificial intelligence virtually indistinguishable from the real thing

- Realistic images and voices can be generated from a text description

PhD researcher Lucas Whittaker, from QUT’s Centre for Behavioural Economics, Society and Technology (BEST), said that because synthetic media could be hard to distinguish from authentic media, the risk of an attack was high for all organisations, large or small, private or public, service- or product-oriented.

In research published in Business Horizons, Mr Whittaker and co-researchers analysed the looming risks that synthetic media poses to businesses and produced a playbook for organisations to prepare for, recognise and recover from synthetic media attacks.

“It’s increasingly likely that individuals and organisations will become victims of synthetic media attacks, especially those in powerful positions or who have access to sensitive information, such as CEOs, managers and employees,” Mr Whittaker said.

“Inauthentic, yet realistic, content such as voices, images, and videos generated by artificial intelligence, which can be indistinguishable from reality, enables malicious actors to use synthetic media attacks to extract money, harm brand reputation and shake customer confidence.

“Artificial intelligence can now use deep neural networks to generate entirely new content because these networks act in some ways like a brain and, when trained on existing data, can learn to generate new data.”

Mr Whittaker said bad actors could collect voice, image or video data of a person from various sources and feed it into neural networks to mimic, for example, a CEO’s voice over the phone to commit fraud.

“We are getting to the point where photorealistic images and videos can be easily generated from scratch just by typing a text description of what the person looks like or says,” he said.

“This alarming situation has led us to produce a synthetic media playbook for organisations to prepare for and deal with synthetic media attacks.

“For instance, start-ups around the world are offering services to detect synthetic media in advance or to authenticate digital media by checking for synthetic manipulation, as it is so easy to trick people into believing and acting upon synthetic media attacks.

“Organisations are moving to protect image provenance, particularly through blockchain-based digital signatures that verify whether media has been altered or tampered with.”

Co-researcher Professor Rebekah Russell-Bennett, who is co-director of BEST, said the increasing accessibility of tools used to generate synthetic media meant anyone whose reputation was of corporate value must be aware of the risks.

“Every CEO or brand representative who has a media presence online or has been featured in video or audio interviews must brace themselves for impersonation by synthetic media,” Professor Russell-Bennett said.

“Their likeness could be hijacked in a deepfake that might, for example, put the person in a compromising situation, or their voice could be synthesised to make a false statement with potentially catastrophic effects, such as consumer boycotts or losses to their company’s share value.

“Every organisation should have a synthetic media incident response playbook which includes preparation, detection, and post-incident procedures.

“Everyone in the organisation must be aware of potential threats, know how to protect themselves, and understand how to escalate emerging incidents.

“Establishing incident response teams to assess reports of suspicious activity, understanding what attackers might try to target, containing and reporting threats, and engaging with cybersecurity organisations to identify technological weaknesses are vital measures.”

The research was conducted by a team comprising Mr Whittaker, Dr Kate Letheren, Professor Rebekah Russell-Bennett (QUT), Professor Jan Kietzmann (University of Victoria, Canada) and Dr Rory Mulcahy (University of the Sunshine Coast).

The paper, “Brace yourself! Why managers should adopt a synthetic media incident response playbook in an age of falsity and synthetic media”, was published in Business Horizons.

QUT Media contacts:

Niki Widdowson, 07 3138 2999 or n.widdowson@qut.edu.au

After hours: 0407 585 901 or media@qut.edu.au.